Axiell Intelligence (AI) and Collections

Axiell Intelligence (AI) is an optional paid feature for institutions with a hosted or on-premises installation of Axiell Collections version 2.0 onwards.

Implementation requires Axiell to configure your Collections application and connect it to our proprietary AI service, which manages the entity extraction process.

For the moment, Axiell Intelligence is available only for English-language content.

Please contact Axiell Sales for more information.

Axiell Intelligence is an optional feature designed to enrich your Collections data by using AI to identify entities (people, places, dates, and so on) in your unstructured content and write back structured keyword metadata linked to authority records and Wikidata.

The core concepts of Axiell Intelligence are Entity Extraction (also known as Named Entity Recognition) and Entity Linking. The first identifies entities within unstructured text in nominated fields, and classifies them into predefined categories such as individuals (people’s names), organizations (names of companies, institutions, or groups), locations, dates, times, and more. Through Entity Linking, structured metadata in the form of keywords (names, terms, etc.) is then written back to the source records, linked to authority records in Persons and institutions and the Thesaurus (created on-the-fly if necessary) and to Wikidata.

Authority data sources are used for vocabulary control and they manage the many names and terms referenced by records in almost every other data source. The purpose of a controlled vocabulary is to ensure consistent use of names and terms throughout your records. This is achieved by specifying that a name or term is preferred; then, when a non-preferred version of a name or term is selected in a Linked field drop list, it is automatically replaced with the preferred name or term.

Axiell Intelligence's AI is able to process data faster and more efficiently than we can, while enabling human oversight to ensure an ideal combination of machine and human intelligence. The end result is enriched data with interlinked records, providing improved context for staff, researchers, and the public.

Like Wikipedia, Wikidata is free and open, but rather than a collection of articles intended primarily to be read by humans, it is a knowledge base that is intended to be read and edited by humans and machines. Wikidata is a source of open data that other projects, including Wikipedia, can use to enrich their services. The basic building block of Wikidata is an item, which represents any kind of real-world topic, concept, or entity that is uniquely identified.

Wikidata stores data in a structured format, allowing for easy retrieval and interlinking with other datasets on the web. Each item in Wikidata is identified by a unique identifier known as a QID (e.g. Q5686 for Charles Dickens).

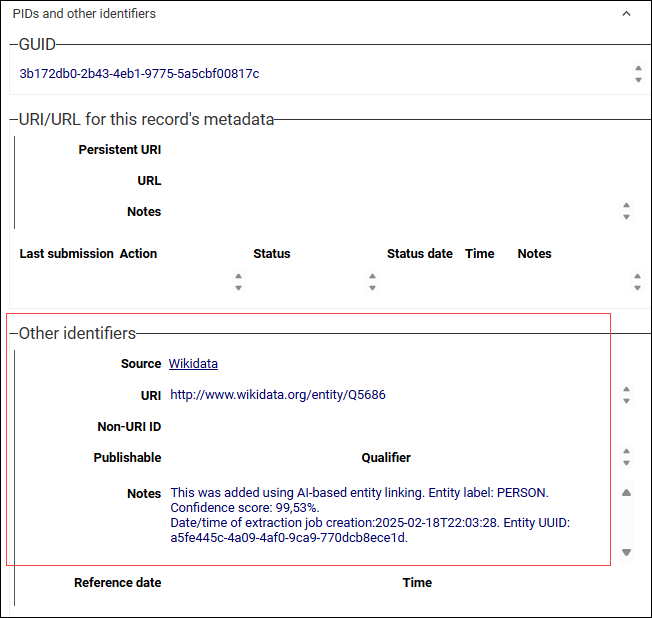

When Axiell Intelligence locates an entity in your Collections content, let's say it finds the name Charles Dickens, and matches it to an entity in Wikidata, on approval of the match the AI tool writes back data to an authority record in Persons and institutions, including a link to the Wikidata entity. As we see here, the URI includes the unique QID.

A URI (Uniform Resource Identifier) is a string of characters used to identify a resource on the internet. It can refer to both abstract and physical resources, such as web pages, email addresses, or files. URIs are essential for locating and interacting with resources on the web.
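The relationship between a QID and a URI can be illustrated with a short Python sketch. It assumes Wikidata's public entity URI pattern (`http://www.wikidata.org/entity/` followed by the QID); the helper names are hypothetical and are not part of Axiell Collections, and the exact form of the URI that the AI tool writes to your records may differ.

```python
import re

# Wikidata's public entity URI prefix (an assumption about the URI form;
# the AI tool may store a different but equivalent address).
WIKIDATA_ENTITY_PREFIX = "http://www.wikidata.org/entity/"

# A QID is the letter Q followed by a positive integer, e.g. Q5686.
QID_PATTERN = re.compile(r"Q[1-9][0-9]*")


def qid_to_uri(qid: str) -> str:
    """Build an entity URI from a QID (hypothetical helper)."""
    if not QID_PATTERN.fullmatch(qid):
        raise ValueError(f"not a valid QID: {qid!r}")
    return WIKIDATA_ENTITY_PREFIX + qid


def uri_to_qid(uri: str) -> str:
    """Recover the QID from the last path segment of a Wikidata URI."""
    qid = uri.rstrip("/").rsplit("/", 1)[-1]
    if not QID_PATTERN.fullmatch(qid):
        raise ValueError(f"no QID found in: {uri!r}")
    return qid


# Q5686 is the QID given above for Charles Dickens.
print(qid_to_uri("Q5686"))
print(uri_to_qid("https://www.wikidata.org/wiki/Q5686"))
```

Because the QID is the final segment of the URI, either value can be derived from the other, which is why a stored URI is enough to identify the Wikidata entity unambiguously.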

The process begins by selecting one or more records in the Catalogue and pushing content from a pre-defined set of fields into the AI tool for analysis. Default sets of fields are provided out-of-the-box, but these can be customized as necessary in the System variables data source (details here); in the Object catalogue (in the Standard Model) they include Title (TI), Description (BE), Content description (CB), Creator history (pa) and Physical description (PB). The AI tool analyses the unstructured content to identify entities and match them to corresponding entities in Wikidata. It does this not only by looking for individual words but also by considering the context in which those words appear.

What follows is a process of validation and disambiguation of name variants to ensure as far as possible that the correct entity match has been identified. Have we matched the correct John Smith? Does Amazon refer to the river or the organization? Is the city of Melbourne referenced in our record in Victoria, Australia or in Florida, USA? The end result is that structured metadata is written back to the source records in the form of keywords linked to existing authority records in Persons and institutions and the Thesaurus (or created if necessary), which are themselves linked to a relevant entity topic in Wikidata.

Again, default sets of fields are provided for writing back the structured metadata, and these too can be customized as necessary; in the Object catalogue these are fields in the Associations panel (Subject (kp) for subject keywords, Name (kj) for people, and so on).

As we'll see, the validation stage can be a manual or automated process. A manual review involves checking each matched entity and approving or rejecting the match. The automated approach is recommended for bulk projects and involves setting a certainty threshold (say, 90%). If the AI analysis indicates that a match meets or exceeds this threshold, it is automatically approved. Whenever AI is used to extract entities and write back structured metadata to your records, a disclaimer is added to every keyword extracted in this way, indicating that AI was used in the matching process: the disclaimer identifies the origin of the keyword, the field and character position it was extracted from, its confidence score, and the date and time of extraction.
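The threshold rule for automated validation can be sketched in a few lines of Python. This is an illustration only: the `EntityMatch` structure, the function name, and the sample values are hypothetical, and what the AI tool does with matches that fall below the threshold is not specified here, so the sketch simply returns them separately.

```python
from dataclasses import dataclass


@dataclass
class EntityMatch:
    """One proposed match between source text and a Wikidata entity (hypothetical)."""
    text: str         # entity text as found in the source field
    qid: str          # identifier of the proposed Wikidata entity
    certainty: float  # certainty of the match as a percentage (0-100)


def split_by_threshold(matches, threshold=90.0):
    """Auto-approve matches that meet or exceed the threshold; return the rest separately."""
    approved = [m for m in matches if m.certainty >= threshold]
    remaining = [m for m in matches if m.certainty < threshold]
    return approved, remaining


# Sample values are invented for illustration; the QIDs are placeholders.
matches = [
    EntityMatch("Entity A", "Q_EXAMPLE_1", 96.5),
    EntityMatch("Entity B", "Q_EXAMPLE_2", 90.0),  # exactly on the threshold
    EntityMatch("Entity C", "Q_EXAMPLE_3", 72.5),
]
approved, remaining = split_by_threshold(matches, threshold=90.0)
print("approved:", [m.text for m in approved])
print("remaining:", [m.text for m in remaining])
```

Note that a match exactly at the threshold is approved, matching the "meets or exceeds" wording above.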

Before we step through how to use the AI tool (here), let's first take a look at what it does:

Example

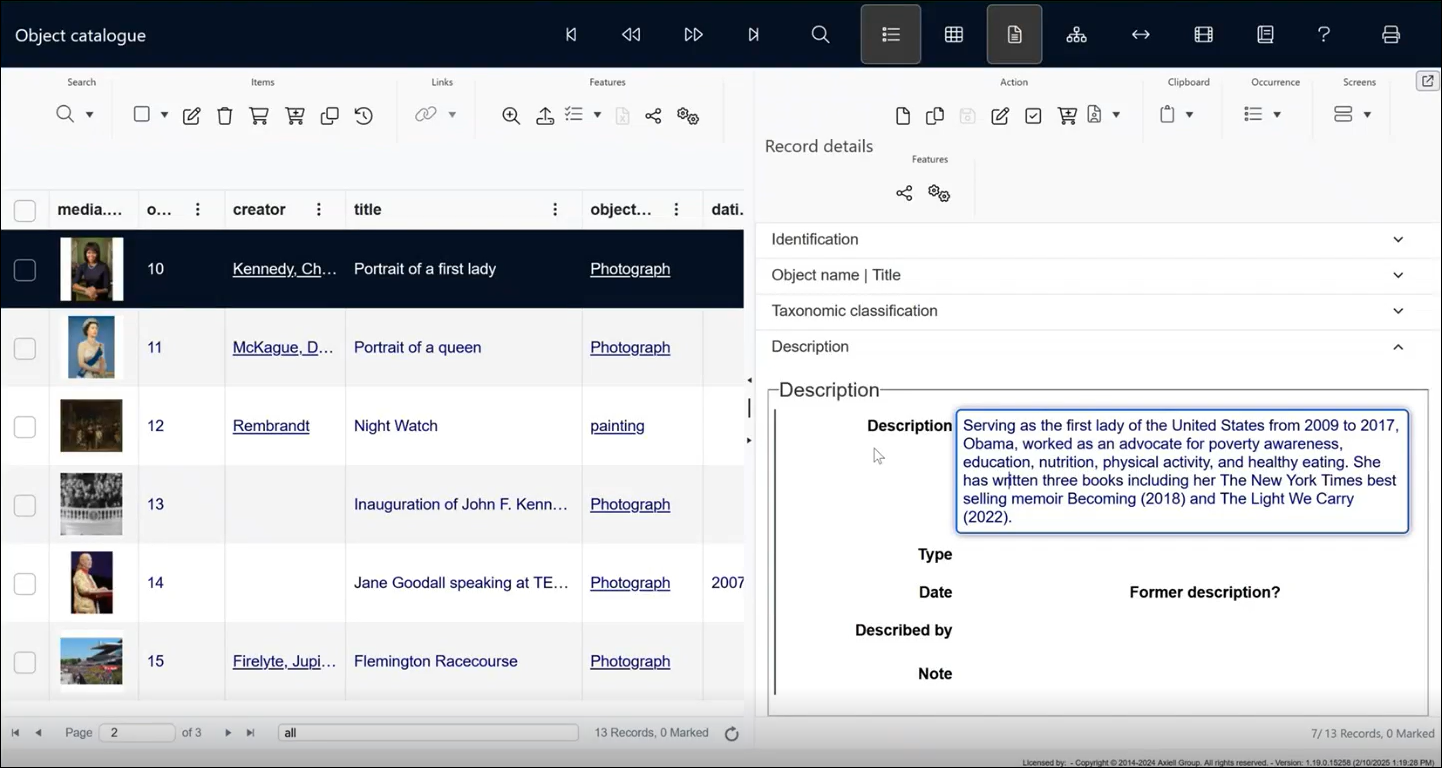

Consider an Object catalogue record for a photograph of Michelle Obama, titled (TI) Portrait of a first lady and with a description (BE) of:

Serving as the first lady of the United States from 2009 to 2017, Obama worked as an advocate for poverty awareness, education, nutrition, physical activity, and healthy eating. She has written three books including her The New York Times best-selling memoir Becoming (2018) and The Light We Carry (2022).

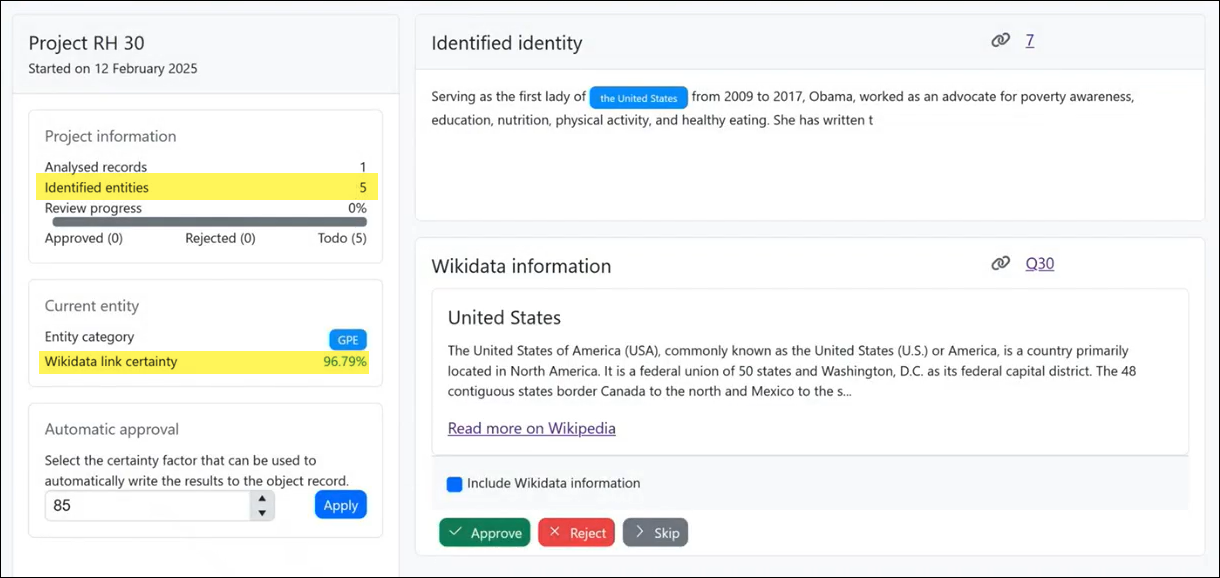

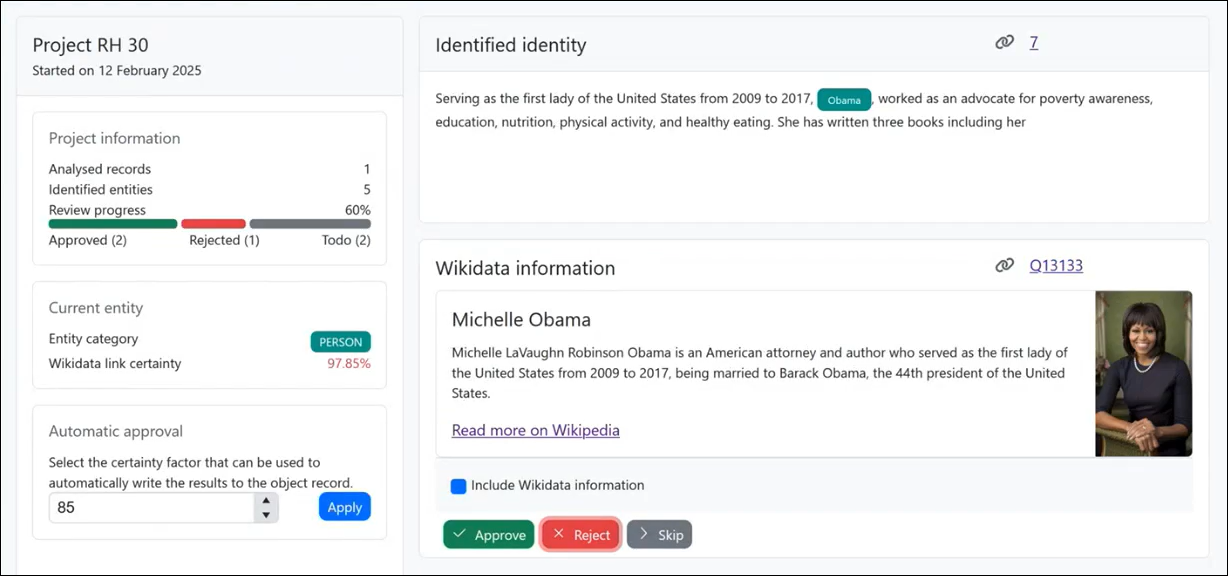

When this record is sent to the AI tool for analysis, five entities are identified, as indicated in the Project information block in the left column:

The first entity found in the content is the United States, and this is matched to an entity in Wikidata for United States with a QID of Q30 (the QID is a hyperlink that you can click to view and review the Wikidata page). As we see in the Current entity block in the left column, the AI tool calculates that this match is correct with a certainty of 96.79%. Note that an Entity category of GPE has been determined; the Entity category is, broadly speaking, the domain to which the entity belongs, and in this case identifies a Geopolitical Entity. Approving this match will move to the next entity located, The New York Times, and so on, until we reach Obama:

It is worth noting that the Collections content does not mention Michelle, but the AI tool has matched the entity to Michelle Obama by considering the context in which the keyword Obama is found.

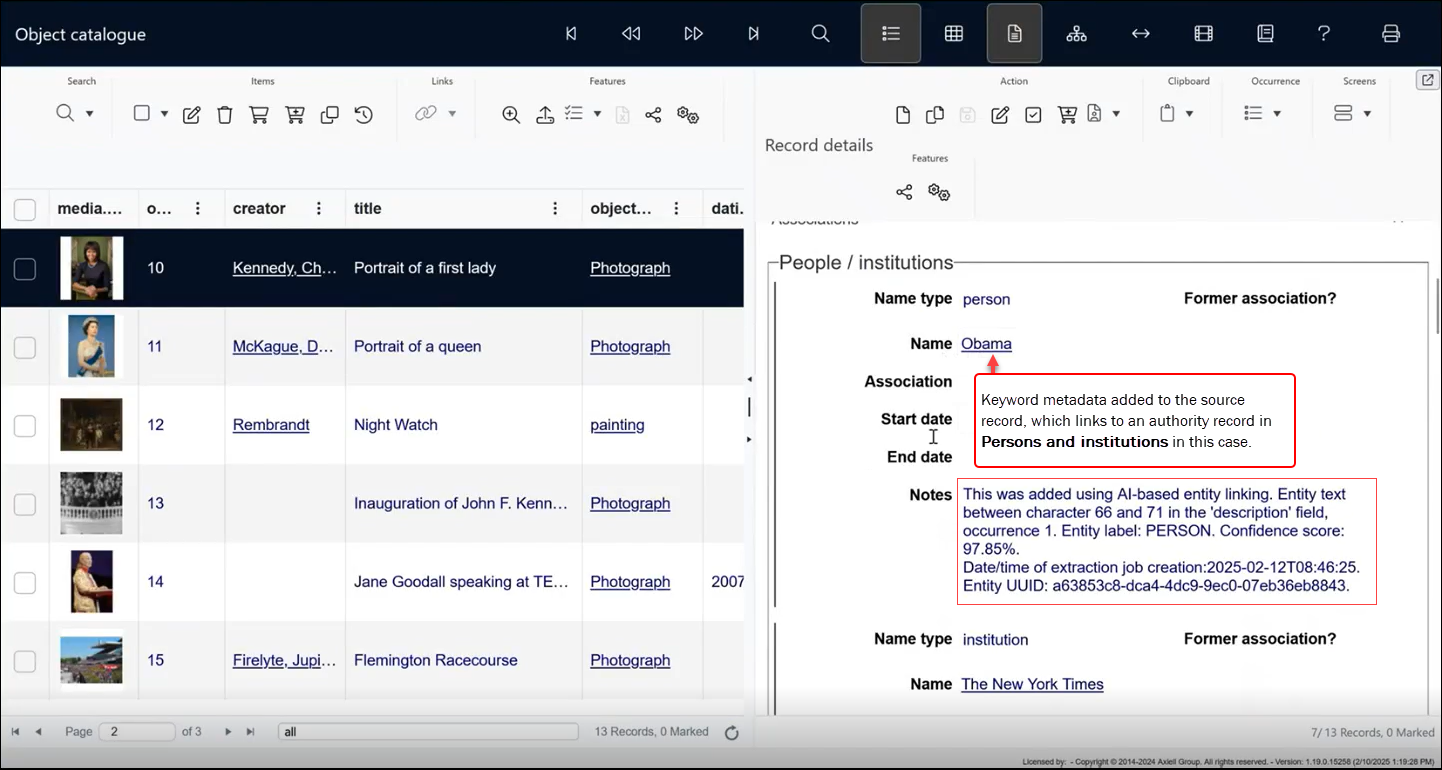

When these entity matches are approved (which can be done manually, as in this example, or automatically), structured keyword metadata is written back to the source record (into the Association fields by default), and links are formed between the source record and any affected authority records in Persons and institutions (for names) and the Thesaurus (for keyword terms); in this case keywords are added for all of the approved matches, including United States, Obama, The New York Times, and so on:

- A disclaimer is added to every keyword extracted in this way, indicating that AI was used in the matching process: the disclaimer identifies the origin of the keyword, the field and character position it was extracted from, its confidence score, and the date and time of extraction.

- In Collections 2.1 onwards, the Wikidata entity title is registered as the term or name in your Collections records.

  In Collections 2.0, the entity term located in your source content is used as the keyword; for example, the keyword Obama is added to the Object catalogue record (not Michelle Obama). If a record is created on-the-fly in your Authority data source, Persons and institutions for example, this keyword will be used.

- If there was no existing record for Michelle Obama in Persons and institutions, one will be created.

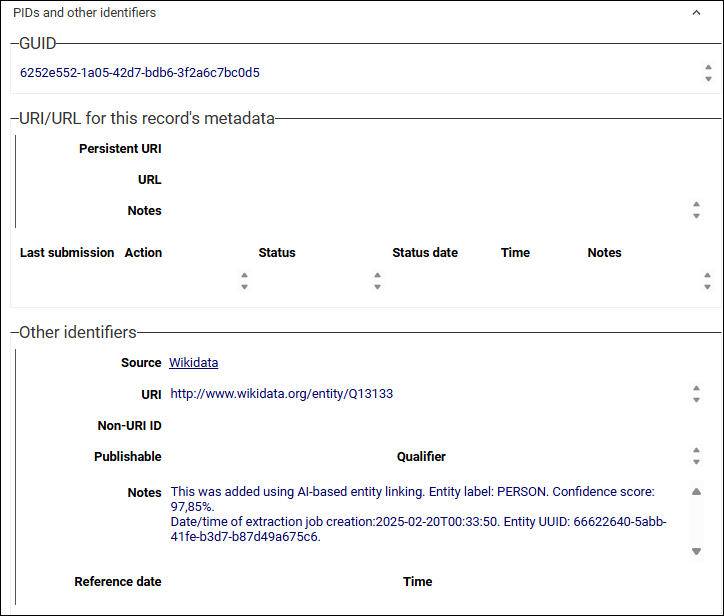

- Details, including a URI and extraction notes, will be added to the PIDs and other identifiers panel in the authority record.

Duplicate records

If there is already a record for Michelle Obama in Persons and institutions but it does not include a URI to a relevant Wikidata entity, a duplicate record will be created with the URI; if there is an existing record for Michelle Obama and it does include a URI to a relevant Wikidata entity, that authority record will be used and linked to from the source record.
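The rule above can be expressed as a small decision function. The Python sketch below is not the AI tool's implementation: `AuthorityRecord` and `resolve_authority` are hypothetical names, records are reduced to a name and an optional URI, and a "relevant" Wikidata entity is assumed to mean an exact match on the stored URI.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AuthorityRecord:
    """Simplified stand-in for a Persons and institutions record (hypothetical)."""
    name: str
    wikidata_uri: Optional[str] = None


def resolve_authority(name, wikidata_uri, existing):
    """Return (record, created) for an approved match, following the rule above."""
    for record in existing:
        # Reuse a record only if it has the same name AND already carries the URI.
        if record.name == name and record.wikidata_uri == wikidata_uri:
            return record, False
    # Either no record exists, or the existing one lacks the URI: a new record
    # is created, which duplicates any same-named record without the URI.
    new_record = AuthorityRecord(name, wikidata_uri)
    existing.append(new_record)
    return new_record, True


uri = "http://www.wikidata.org/entity/Q_EXAMPLE"   # placeholder, not a real QID
records = [AuthorityRecord("Michelle Obama")]        # existing record without a URI

first, created_first = resolve_authority("Michelle Obama", uri, records)
second, created_second = resolve_authority("Michelle Obama", uri, records)
print(created_first, created_second, len(records))
```

In this sketch the first call creates a duplicate because the existing record has no URI, while the second call reuses the record created by the first.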

A future release of the AI tool will be able to process records in your Authority data sources; running the AI tool on your authority records first will add a URI link to a relevant Wikidata entity and extraction notes to affected Persons and institutions or Thesaurus records.

Read on for details about how to use the AI tool.